Overview

I was a member of Team Marble (Multi-agent Autonomy with Radar-Based Localization for Exploration), where I worked on multi-sensor fusion for perception and on planning and control for autonomous mobile robots. Team Marble stems from the Autonomous Robotics and Perception Group (ARPG) at the University of Colorado Boulder. I was part of the team in the lead-up to the Tunnel event.

Team members: Gene Rush, Mike Miles, and Andrew Mills (on the left, from front to back) and Boston Cleek and Wyatt Raich (on the right, from left to right).

Tunnel Event

The Subterranean Challenge aims to develop intelligent systems that can rapidly and autonomously explore subterranean environments, which are complex, unpredictable, and diverse. The Tunnel event took place in a research mine in Pittsburgh from August 15 to 22, 2019. Our team of robots, three Clearpath Huskies and a vehicle named the Ninja Car (custom built by the Autonomous Robotics and Perception Group), set out to explore the research mine. While exploring the unknown environment, the robots mapped the mine, searched for artifacts (e.g., a backpack, a person, a fire extinguisher), and reported the artifact locations as accurately as possible.

Testing

Testing was performed at the Edgar Research Mine in Idaho Springs, Colorado. We focused on the vehicle's mapping performance and on how long it could drive autonomously without getting stuck.

The Ninja Car was driven in a rougher part of the mine to demonstrate traversability.

The Ninja Car driving autonomously down the 1300 ft long Miami section of the Edgar Mine.

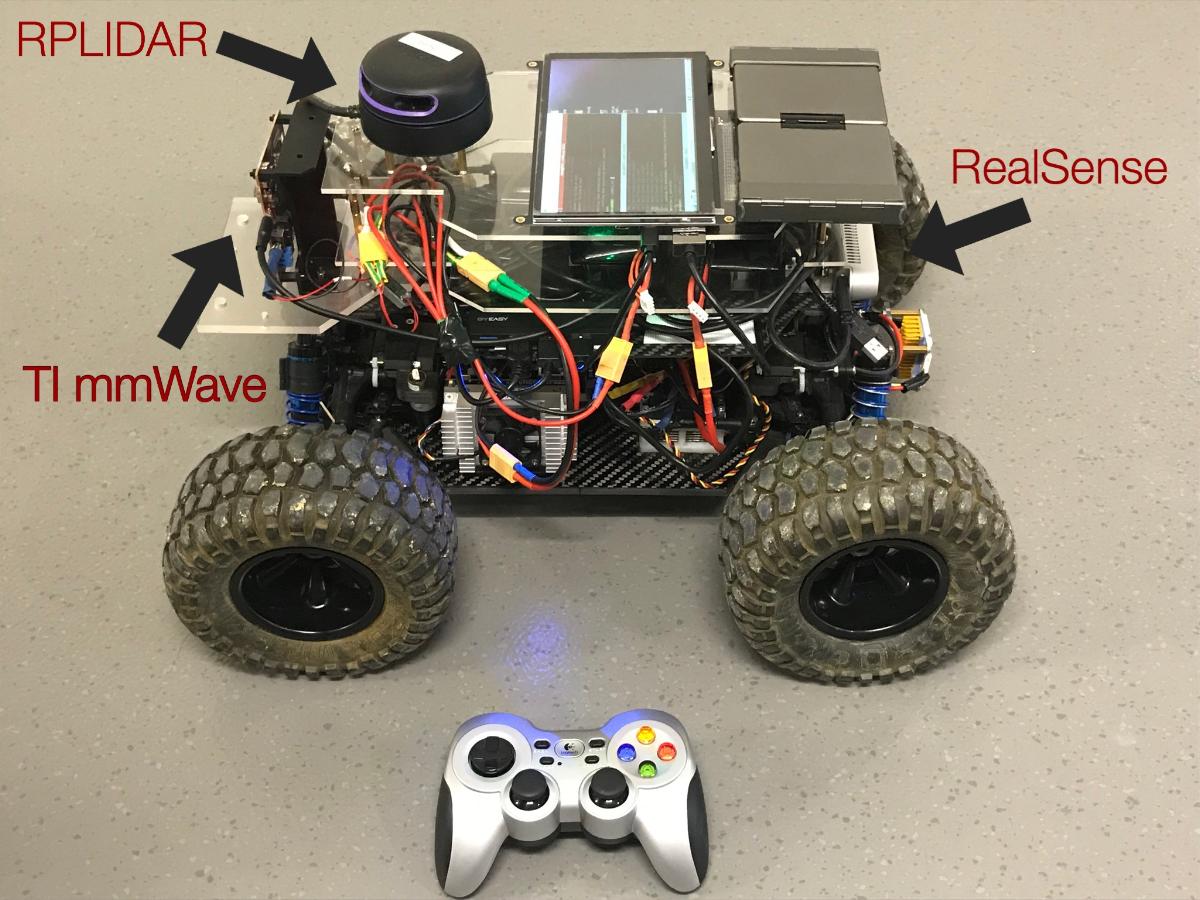

Ninja Car

Hardware

The NUC ran the autonomy stack (i.e., perception, planning, and control), and the TX2 ran YOLO (via Darknet) for artifact detection.

Compute

- Intel NUC i7

- Nvidia Jetson TX2

Sensors

- RealSense D435

- LORD MicroStrain IMU

- TI mmWave radar board

- RPLIDAR A3

Perception

Several SLAM packages were tested to determine which gave the best results. The Ninja Car ran Compass, a visual-inertial SLAM package developed by the ARPG lab that uses the RealSense camera and the MicroStrain IMU. Because the controller depended heavily on velocity feedback, the car also ran Goggles, an ARPG package that estimates velocity from the TI mmWave radar board. The radar produced the most accurate velocity feedback.
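Radar is useful for velocity feedback because every return carries a Doppler (radial) speed. A common way to turn those speeds into an ego-velocity estimate, assumed here and not necessarily what Goggles does internally, is a least-squares fit under the assumption that the returns come from stationary objects. The planar sketch below uses a hypothetical `ego_velocity_from_doppler` function that takes azimuths in radians and radial speeds in m/s; a practical estimator would also reject returns from moving objects, for example with RANSAC.

```python
import math


def ego_velocity_from_doppler(azimuths, radial_speeds):
    """Estimate planar ego velocity (vx, vy) from radar Doppler returns.

    Assumes every return comes from a stationary target, so its measured
    radial speed is v_r = -(cos(a) * vx + sin(a) * vy), where a is the
    azimuth of the return in the sensor frame.
    """
    # Accumulate the 2x2 normal equations (D^T D) v = -D^T v_r.
    sxx = sxy = syy = bx = by = 0.0
    for a, vr in zip(azimuths, radial_speeds):
        dx, dy = math.cos(a), math.sin(a)
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        bx -= dx * vr
        by -= dy * vr
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("returns do not span enough azimuths")
    # Solve the symmetric 2x2 system with Cramer's rule.
    vx = (bx * syy - sxy * by) / det
    vy = (sxx * by - sxy * bx) / det
    return vx, vy
```

Returns spread over a wider field of view make the normal equations better conditioned, which is one reason a single narrow beam cannot recover both velocity components.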

Planning and Control

Initially, the Ninja Car control software included an implementation of Model Predictive Control (MPC). The vehicle performed local planning between waypoints by generating a reference trajectory, which was a solution to the differential equations modeling the vehicle dynamics. That trajectory was obtained by solving a two-point boundary value problem with a shooting method.
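To make the shooting method concrete, here is a minimal sketch on a toy problem, not the Ninja Car's actual dynamics: a hypothetical constant-speed kinematic model with state (x, y, heading), where the single unknown is a constant path curvature. `rollout` integrates the model with fourth-order Runge-Kutta, and `shoot` uses the secant method to adjust the curvature until the final lateral position matches the goal. The speed, horizon, and step count are illustrative assumptions.

```python
import math


def rollout(kappa, v=1.0, T=2.0, steps=200):
    """Integrate x' = v cos(th), y' = v sin(th), th' = v * kappa from the origin with RK4."""
    def f(s):
        _, _, th = s
        return (v * math.cos(th), v * math.sin(th), v * kappa)

    s = (0.0, 0.0, 0.0)
    h = T / steps
    for _ in range(steps):
        k1 = f(s)
        k2 = f(tuple(si + 0.5 * h * ki for si, ki in zip(s, k1)))
        k3 = f(tuple(si + 0.5 * h * ki for si, ki in zip(s, k2)))
        k4 = f(tuple(si + h * ki for si, ki in zip(s, k3)))
        s = tuple(si + h / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                  for si, a, b, c, d in zip(s, k1, k2, k3, k4))
    return s  # final (x, y, heading)


def shoot(y_goal, k0=0.0, k1=0.1, tol=1e-9, max_iter=50):
    """Secant iteration on the unknown curvature until the final y matches y_goal."""
    r0 = rollout(k0)[1] - y_goal
    r1 = rollout(k1)[1] - y_goal
    for _ in range(max_iter):
        if abs(r1) < tol:
            return k1
        if r1 == r0:
            break  # flat residual: the secant step is undefined
        k0, k1 = k1, k1 - r1 * (k1 - k0) / (r1 - r0)
        r0, r1 = r1, rollout(k1)[1] - y_goal
    raise RuntimeError("shooting method did not converge")
```

For example, `shoot(0.5)` returns the curvature that ends the 2 m long trajectory 0.5 m to the side of its start. A real two-point problem has several boundary conditions and unknowns, so the scalar secant update becomes a Newton-type step on a vector residual.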

I primarily worked on extending the original 2D vehicle dynamics to 3D. The model was designed to yield high-fidelity control solutions for dynamic driving on 3D meshes. MPC relied on Gauss-Newton optimization to find control solutions, and this numerical method was slow in practice: around 4 Hz with the 3D vehicle dynamics. I was working toward a stochastic optimal controller similar to the MPPI (Model Predictive Path Integral) controller used on the Georgia Tech AutoRally car. The goal was to exploit highly parallel hardware such as GPUs with a parallelizable stochastic search algorithm that finds control solutions faster than traditional optimization algorithms.
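The core idea of an MPPI-style controller fits in a few lines: sample many perturbed control sequences, roll each one out through the model, and average the perturbations with weights that decay exponentially with rollout cost. The sketch below is a toy under stated assumptions, not the AutoRally implementation: the model is a hypothetical 1D double integrator, the quadratic cost weights are arbitrary, and a simple effort penalty stands in for the control-cost term of the full MPPI derivation. Every rollout is independent of the others, which is what makes the method a good fit for a GPU; here they simply run serially.

```python
import math
import random


def rollout_cost(state, controls, goal, dt):
    """Simulate the toy double integrator and return its quadratic cost."""
    p, v = state
    cost = 0.0
    for a in controls:
        v += a * dt
        p += v * dt
        cost += (p - goal) ** 2 + 0.1 * v ** 2 + 0.1 * a ** 2
    return cost


def mppi_update(state, u_nom, goal, rng, num_samples=256, sigma=1.0, lam=10.0, dt=0.1):
    """One MPPI iteration: perturb, roll out, and take a cost-weighted average."""
    horizon = len(u_nom)
    noises, costs = [], []
    for _ in range(num_samples):
        eps = [rng.gauss(0.0, sigma) for _ in range(horizon)]
        perturbed = [u + e for u, e in zip(u_nom, eps)]
        noises.append(eps)
        costs.append(rollout_cost(state, perturbed, goal, dt))
    # Softmin weights; subtracting the minimum cost avoids underflow in exp().
    c_min = min(costs)
    weights = [math.exp(-(c - c_min) / lam) for c in costs]
    total = sum(weights)
    return [u_nom[t] + sum(w * n[t] for w, n in zip(weights, noises)) / total
            for t in range(horizon)]


def run_mppi(goal=1.0, steps=80, horizon=20, dt=0.1, seed=0):
    """Receding-horizon loop: optimize, apply the first control, shift the plan."""
    rng = random.Random(seed)
    p, v = 0.0, 0.0
    u_nom = [0.0] * horizon
    for _ in range(steps):
        u_nom = mppi_update((p, v), u_nom, goal, rng, dt=dt)
        v += u_nom[0] * dt
        p += v * dt
        u_nom = u_nom[1:] + [0.0]  # warm start the next iteration
    return p, v
```

`run_mppi()` drives the toy model from position 0 toward a goal at 1 and returns the final position and velocity. The temperature `lam` trades off between averaging over many samples (large values) and trusting only the lowest-cost rollouts (small values).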

The time frame leading up to the Tunnel event was too short to develop the controller described above. Instead, the Ninja Car used a centering algorithm that sampled the point cloud from the RPLIDAR. At intersections the car took a random turn, and at dead ends it turned around. This method was much simpler, involved no planning, and performed well during testing in the Edgar Mine. The car was able to drive the Miami section of the Edgar Mine (1300 ft long one way) autonomously, including driving down and back, avoiding obstacles, and turning down different corridors.
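The reactive behavior above can be sketched as follows. This is a hypothetical reconstruction, not the code that ran on the car: the scan is assumed to be a list of `(angle_deg, range_m)` pairs with angles measured counterclockwise from straight ahead (so left is positive), and the sector limits, gain, and distance thresholds are illustrative values.

```python
import math
import random


def sector_min(scan, lo_deg, hi_deg):
    """Smallest valid range among (angle_deg, range_m) returns inside [lo_deg, hi_deg]."""
    vals = [r for a, r in scan if lo_deg <= a <= hi_deg and r > 0.0]
    return min(vals) if vals else math.inf  # no returns: treat the sector as open


def centering_command(scan, gain=0.5, max_steer=0.4):
    """Steer toward the farther wall; a positive command means steer left."""
    left = sector_min(scan, 60.0, 120.0)
    right = sector_min(scan, -120.0, -60.0)
    steer = gain * (left - right)
    return max(-max_steer, min(max_steer, steer))


def choose_action(scan, rng, front_clear=1.0, opening=3.0):
    """Pick a high-level behavior: random branch, turn around, or centering."""
    front = sector_min(scan, -15.0, 15.0)
    left = sector_min(scan, 60.0, 120.0)
    right = sector_min(scan, -120.0, -60.0)
    options = []
    if left > opening:
        options.append("turn_left")
    if right > opening:
        options.append("turn_right")
    if options and front > opening:
        options.append("straight")
    if options:
        return rng.choice(options)  # intersection: take a random branch
    if front < front_clear:
        return "turn_around"  # dead end
    return "center"  # ordinary corridor: follow the centerline
```

In a plain corridor `choose_action` returns `"center"` and `centering_command` pushes the car away from the nearer wall; the intersection check runs first because an open side sector would otherwise saturate the centering command.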